Thursday, January 28, 2021

Struggling to install Python in Windows 10

Posted by Teck at 1/28/2021 09:59:00 PM, 0 comments

Labels: Programming

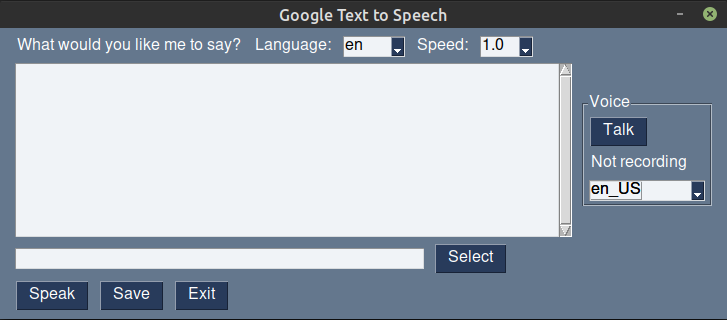

GUI for Google's text-to-speech service

Posted by Teck at 1/28/2021 12:01:00 AM, 0 comments

Labels: Open source, Programming

Thursday, January 21, 2021

Someday ft. Emarie (insert song for Cynthia's arc in Great Pretender)

Composed & Arranged by Yutaka Yamada

Performed by YVY

But I'm not gonna

Pretend I'm all better

Thought we were forever

Hearts still in pieces

And your shadow follows me places, all the time

I can't shake this feeling of loneliness

That's just life I guess

But I believe, someday soon

I'll learn to overcome it

The scars will heal, heart will bloom

No more dwelling

'Cause today I choose to say

"I will love again someday"

So I'm not gonna stay

Paralyzed 'cause I'm not by your side

Time passes slowly

Makes me feel lonely

Gotta stop feeling sorry

I can't always feel worried

Mind won't stop spinning

And I keep on overthinking, all the time

I hope with time the healing will mend my soul

And I can let you go

But I believe, someday soon

I'll learn to overcome it

The scars will heal, heart will bloom

No more dwelling

'Cause today I choose to say

"I will love again someday"

So I'm not gonna stay

Paralyzed 'cause I'm not by your side

But I believe, that I will be, much better eventually

But I won't forget our love, ever

Time heals all, know that time heals all

I believe that someday soon

I'll remember you with a smile

And overcome

And I will overcome

Someday soon I'll overcome

Someday soon I'll Over, over-come

I choose to say

"I will love again someday, again someday,"

I'll overcome

Lyric source: https://www.animesonglyrics.com/great-pretender/someday

Posted by Teck at 1/21/2021 01:00:00 PM, 0 comments

Labels: Songs

Wednesday, January 20, 2021

Creating a font using FontForge

Posted by Teck at 1/20/2021 11:45:00 PM, 0 comments

Labels: Open source

Tuesday, January 19, 2021

Platinum fountain pen sold at Daiso

Posted by Teck at 1/19/2021 10:01:00 AM, 0 comments

Labels: Miscellaneous

Sunday, January 17, 2021

Daiso fountain pens

Posted by Teck at 1/17/2021 11:03:00 PM, 0 comments

Labels: Miscellaneous

Friday, January 15, 2021

Tellsis language ("Nunkish") translator written in Python3

Nun posuk Gilbert ui gikapmarikon

$ ./telsistrans.py -t "Posuk \\Gilbert\\ nunki." -sl telsis

Thank you Major Gilbert.

Source language: en

Input source text: I love you

Target language:

In Tamil script: நான் உன்னை நேசிக்கிறேன்

Pronunciation: Nāṉ uṉṉai nēcikkiṟēṉ

In unaccented characters: Nan unnai necikkiren

In target language: Nun annui noyirrikon

Source language: telsis

Input source text: Nunki posuk

Target language: ja

In Tamil script: நன்றி மேஜர்

Pronunciation:

In unaccented characters: Nanri mejar

In target language: ありがとう少佐
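In the first example above, the last step from "Nan unnai necikkiren" to "Nun annui noyirrikon" looks like a plain letter swap. Here is a minimal Python sketch of just that step. It assumes only the letter pairs visible in this one example (a/u, e/o, c/y, k/r); the name `swap_letters` and the partial table are illustrative and are not taken from telsistrans.py.

```python
# Partial swap table, inferred only from the "I love you" example above.
# The real script presumably covers more letters; this subset is an assumption.
PAIRS = [("a", "u"), ("e", "o"), ("c", "y"), ("k", "r")]


def build_table(pairs):
    """Build a str.translate table that swaps each pair in both directions."""
    table = {}
    for x, y in pairs:
        for src, dst in ((x, y), (y, x)):
            table[ord(src)] = dst
            table[ord(src.upper())] = dst.upper()
    return table


SWAP = build_table(PAIRS)


def swap_letters(text):
    """Apply the letter swap; characters not in the table pass through."""
    return text.translate(SWAP)


print(swap_letters("Nan unnai necikkiren"))  # prints: Nun annui noyirrikon
```

Because every pair is swapped in both directions, applying `swap_letters` twice gives back the original text, at least for the letters covered here.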

translator = telsis_translator()

srctext = "I love you"

srclang = 'en'

translator.lang2telsis(srctext, srclang)

print(translator.results['tgt_text']) # Print out results of translation

srctext = "Nunki posuk"

tgtlang = 'ja'

translator.telsis2lang(srctext, tgtlang)

print(translator.results['tgt_text']) # Print out results of translation

ありがとう少佐

unidecode (for converting to unaccented characters)

requests (for conversion to Tamil script)

Pillow (for rendering in Tellsis font)
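To illustrate what the "unaccented characters" step does, here is a small sketch that uses only the standard library. Note that it swaps in `unicodedata` decomposition instead of unidecode, so it may behave differently on other inputs; `strip_accents` is a hypothetical helper name, not part of the translator.

```python
import unicodedata


def strip_accents(text):
    """Decompose characters (NFD) and drop the combining marks."""
    decomposed = unicodedata.normalize("NFD", text)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


# Pronunciation string taken from the example output in this post
print(strip_accents("Nāṉ uṉṉai nēcikkiṟēṉ"))  # prints: Nan unnai necikkiren
```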

I tried a Kivy version too, but the default theme is a bit dark and I still haven't figured out how to change the theme, so it will be shelved for a while.

Posted by Teck at 1/15/2021 11:52:00 PM, 3 comments

Labels: Open source, Programming, Violet Evergarden

Monday, January 11, 2021

A quiet day in Yokohama's Chinatown

Posted by Teck at 1/11/2021 09:53:00 PM, 0 comments

Labels: Miscellaneous

PlatformIO project that can also be used in Arduino IDE

src_dir = MySketch

Posted by Teck at 1/11/2021 05:25:00 PM, 0 comments

Labels: Open source, Programming

Sunday, January 10, 2021

Saturday, January 09, 2021

Display WiFi RSSI, time, and date on M5Stick C

My sketch shows the RSSI (in dBm) color-coded: green for good, yellow for moderate, and red for poor. The signal counts as moderate once the RSSI drops below -60 dBm and as poor once it drops below -70 dBm. I decided on these figures after looking at the table in this article.
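As a quick way to check the thresholds without the device, the same color rule can be written as a small Python function. This is only a sketch of the rule described above; `rssi_color` is a made-up name and the colors are returned as plain strings.

```python
def rssi_color(rssi_dbm):
    """Map an RSSI reading in dBm to the color used on the display."""
    if rssi_dbm < -70:
        return "red"      # poor signal
    if rssi_dbm < -60:
        return "yellow"   # moderate signal
    return "green"        # good signal


for reading in (-55, -65, -75):
    print(reading, rssi_color(reading))
```

Readings of exactly -60 dBm and -70 dBm fall into the better band, because both comparisons are strict.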

Put the configuration settings in a separate config.h file that contains:

#define TIMEZONE 9

#define WIFI_SSID "your_wifi_ssid"

#define WIFI_PASSWORD "your_wifi_password"

TIMEZONE is the number of hours ahead of GMT (use a negative number if behind GMT)

WIFI_SSID is the SSID to connect to

WIFI_PASSWORD is the password for that SSID
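The sketch multiplies TIMEZONE by 3600 to get the offset in seconds. The arithmetic can be checked on a PC with a few lines of Python; the function names below are only for illustration and the sample date is arbitrary.

```python
from datetime import datetime, timedelta, timezone

TIMEZONE = 9  # same meaning as in config.h: hours ahead of GMT


def utc_offset_seconds(hours):
    """Offset in seconds for a whole-hour timezone."""
    return hours * 3600


def to_local(utc_dt, hours):
    """Convert an aware UTC datetime to the given fixed-offset zone."""
    return utc_dt.astimezone(timezone(timedelta(hours=hours)))


utc_noon = datetime(2021, 1, 9, 12, 0, 0, tzinfo=timezone.utc)
print(utc_offset_seconds(TIMEZONE))                               # prints: 32400
print(to_local(utc_noon, TIMEZONE).strftime("%Y-%m-%d %H:%M:%S"))  # prints: 2021-01-09 21:00:00
print(utc_offset_seconds(-5))                                     # prints: -18000
```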

#include <M5StickC.h>

#include <WiFi.h>

#include "time.h"

#include "config.h"

#include <ArduinoOTA.h>

// default hostname if not defined in config.h

#ifndef HOSTNAME

#define HOSTNAME "m5stickc"

#endif

// use the WiFi settings in config.h file

const char* ssid = WIFI_SSID;

const char* password = WIFI_PASSWORD;

// define the NTP server to use

const char* ntpServer = "ntp.nict.jp";

// define what timezone you are in

int timeZone = TIMEZONE * 3600;

// counter for the delay workaround

int tcount = 0;

// LCD Status

bool LCD = true;

RTC_TimeTypeDef RTC_TimeStruct;

RTC_DateTypeDef RTC_DateStruct;

// delay() usually blocks everything else, so use this counter-based workaround instead

bool timeToDo(int tbase) {

tcount++;

if (tcount == tbase) {

tcount = 0;

return true;

} else {

return false;

}

}

// Syncing time from NTP Server

void timeSync() {

M5.Lcd.setTextSize(1);

Serial.println("Syncing Time");

Serial.printf("Connecting to %s ", ssid);

M5.Lcd.fillScreen(BLACK);

M5.Lcd.setCursor(20, 15);

M5.Lcd.println("connecting WiFi");

WiFi.begin(ssid, password);

while (WiFi.status() != WL_CONNECTED) {

delay(500);

Serial.print(".");

}

Serial.println(" CONNECTED");

M5.Lcd.fillScreen(BLACK);

M5.Lcd.setCursor(20, 15);

M5.Lcd.println("Connected");

// Set ntp time to local

configTime(timeZone, 0, ntpServer);

// Get local time

struct tm timeInfo;

if (getLocalTime(&timeInfo)) {

// Set RTC time

RTC_TimeTypeDef TimeStruct;

TimeStruct.Hours = timeInfo.tm_hour;

TimeStruct.Minutes = timeInfo.tm_min;

TimeStruct.Seconds = timeInfo.tm_sec;

M5.Rtc.SetTime(&TimeStruct);

RTC_DateTypeDef DateStruct;

DateStruct.WeekDay = timeInfo.tm_wday;

DateStruct.Month = timeInfo.tm_mon + 1;

DateStruct.Date = timeInfo.tm_mday;

DateStruct.Year = timeInfo.tm_year + 1900;

M5.Rtc.SetData(&DateStruct);

Serial.println("Time now matching NTP");

M5.Lcd.fillScreen(BLACK);

M5.Lcd.setCursor(20, 15);

M5.Lcd.println("S Y N C");

delay(500);

M5.Lcd.fillScreen(BLACK);

}

}

void buttons_code() {

// Button A toggles the LCD (ON/OFF)

if (M5.BtnA.wasPressed()) {

if (LCD) {

M5.Lcd.writecommand(ST7735_DISPOFF);

M5.Axp.ScreenBreath(0);

LCD = !LCD;

} else {

M5.Lcd.writecommand(ST7735_DISPON);

M5.Axp.ScreenBreath(255);

LCD = !LCD;

}

}

// Button B resyncs the time when held for 2 seconds

if (M5.BtnB.pressedFor(2000)) {

timeSync();

}

}

// Printing WiFi RSSI and time to LCD

void doTime() {

//if (timeToDo(1000)) {

vTaskDelay(1000 / portTICK_PERIOD_MS);

M5.Lcd.setCursor(10, 10);

M5.Lcd.setTextSize(1);

M5.Lcd.printf("%s: ", WiFi.SSID().c_str()); // SSID() returns a String, so pass its C string to %s

long strength = WiFi.RSSI();

if(strength < -70) M5.Lcd.setTextColor(RED, BLACK);

else if(strength < -60) M5.Lcd.setTextColor(YELLOW, BLACK);

else M5.Lcd.setTextColor(GREEN, BLACK);

M5.Lcd.printf("%02ld\n", strength); // strength is a long, so use %ld

M5.Lcd.setTextSize(3);

M5.Lcd.setTextColor(WHITE, BLACK);

M5.Rtc.GetTime(&RTC_TimeStruct);

M5.Rtc.GetData(&RTC_DateStruct);

M5.Lcd.setCursor(10, 25);

M5.Lcd.printf("%02d:%02d:%02d\n", RTC_TimeStruct.Hours, RTC_TimeStruct.Minutes, RTC_TimeStruct.Seconds);

M5.Lcd.setCursor(15, 60);

M5.Lcd.setTextSize(1);

M5.Lcd.setTextColor(WHITE, BLACK);

M5.Lcd.printf("Date: %04d-%02d-%02d\n", RTC_DateStruct.Year, RTC_DateStruct.Month, RTC_DateStruct.Date);

//}

}

void setup() {

M5.begin();

M5.Lcd.setRotation(1);

M5.Lcd.fillScreen(BLACK);

M5.Lcd.setTextSize(1);

M5.Lcd.setTextColor(WHITE,BLACK);

timeSync(); // syncs the time every time the device is turned on; comment this out if WiFi is often unavailable, because the connect loop blocks until WiFi is up

// Port defaults to 3232

// ArduinoOTA.setPort(3232);

// Hostname defaults to esp3232-[MAC]

ArduinoOTA.setHostname(HOSTNAME);

// No authentication by default

// ArduinoOTA.setPassword("admin");

// Password can be set with its md5 value as well

// MD5(admin) = 21232f297a57a5a743894a0e4a801fc3

// ArduinoOTA.setPasswordHash("21232f297a57a5a743894a0e4a801fc3");

ArduinoOTA

.onStart([]() {

String type;

if (ArduinoOTA.getCommand() == U_FLASH)

type = "sketch";

else // U_SPIFFS

type = "filesystem";

// NOTE: if updating SPIFFS this would be the place to unmount SPIFFS using SPIFFS.end()

Serial.println("Start updating " + type);

})

.onEnd([]() {

Serial.println("\nEnd");

})

.onProgress([](unsigned int progress, unsigned int total) {

Serial.printf("Progress: %u%%\r", (progress / (total / 100)));

})

.onError([](ota_error_t error) {

Serial.printf("Error[%u]: ", error);

if (error == OTA_AUTH_ERROR) Serial.println("Auth Failed");

else if (error == OTA_BEGIN_ERROR) Serial.println("Begin Failed");

else if (error == OTA_CONNECT_ERROR) Serial.println("Connect Failed");

else if (error == OTA_RECEIVE_ERROR) Serial.println("Receive Failed");

else if (error == OTA_END_ERROR) Serial.println("End Failed");

});

ArduinoOTA.begin();

}

void loop() {

M5.update();

buttons_code();

doTime();

ArduinoOTA.handle();

}

Posted by Teck at 1/09/2021 05:23:00 PM, 0 comments

Labels: Open source, Programming

Friday, January 08, 2021

Prevent .xsession-error file from growing too big

Posted by Teck at 1/08/2021 10:46:00 AM, 0 comments

Labels: Open source

Thursday, January 07, 2021

Seven-herb porridge 七草粥

Posted by Teck at 1/07/2021 11:01:00 PM, 0 comments

Labels: Miscellaneous

HDMI capture using Raspberry Pi 4

usb 1-1.2: New USB device found, idVendor=534d, idProduct=2109, bcdDevice=21.00

usb 1-1.2: New USB device strings: Mfr=1, Product=2, SerialNumber=0

usb 1-1.2: Product: USB3. 0 capture

usb 1-1.2: Manufacturer: MACROSILICON

hid-generic 0003:534D:2109.0005: hiddev97,hidraw4: USB HID v1.10 Device [MACROSILICON USB3. 0 capture] on usb-0000:01:00.0-1.2/input4

uvcvideo: Found UVC 1.00 device USB3. 0 capture (534d:2109)

usbcore: registered new interface driver uvcvideo

USB Video Class driver (1.1.1)

usbcore: registered new interface driver snd-usb-audio

Type: Video Capture

[0]: 'MJPG' (Motion-JPEG, compressed)

Size: Discrete 1920x1080

Interval: Discrete 0.017s (60.000 fps)

Interval: Discrete 0.033s (30.000 fps)

Interval: Discrete 0.040s (25.000 fps)

Interval: Discrete 0.050s (20.000 fps)

Interval: Discrete 0.100s (10.000 fps)

Size: Discrete 1600x1200

Interval: Discrete 0.017s (60.000 fps)

Interval: Discrete 0.033s (30.000 fps)

Interval: Discrete 0.040s (25.000 fps)

Interval: Discrete 0.050s (20.000 fps)

Interval: Discrete 0.100s (10.000 fps)

Size: Discrete 1360x768

Interval: Discrete 0.017s (60.000 fps)

Interval: Discrete 0.033s (30.000 fps)

Interval: Discrete 0.040s (25.000 fps)

Interval: Discrete 0.050s (20.000 fps)

Interval: Discrete 0.100s (10.000 fps)

Size: Discrete 1280x1024

Interval: Discrete 0.017s (60.000 fps)

Interval: Discrete 0.033s (30.000 fps)

Interval: Discrete 0.040s (25.000 fps)

Interval: Discrete 0.050s (20.000 fps)

Interval: Discrete 0.100s (10.000 fps)

Size: Discrete 1280x960

Interval: Discrete 0.017s (60.000 fps)

Interval: Discrete 0.033s (30.000 fps)

Interval: Discrete 0.040s (25.000 fps)

Interval: Discrete 0.050s (20.000 fps)

Interval: Discrete 0.100s (10.000 fps)

Size: Discrete 1280x720

Interval: Discrete 0.017s (60.000 fps)

Interval: Discrete 0.020s (50.000 fps)

Interval: Discrete 0.033s (30.000 fps)

Interval: Discrete 0.050s (20.000 fps)

Interval: Discrete 0.100s (10.000 fps)

Size: Discrete 1024x768

Interval: Discrete 0.017s (60.000 fps)

Interval: Discrete 0.020s (50.000 fps)

Interval: Discrete 0.033s (30.000 fps)

Interval: Discrete 0.050s (20.000 fps)

Interval: Discrete 0.100s (10.000 fps)

Size: Discrete 800x600

Interval: Discrete 0.017s (60.000 fps)

Interval: Discrete 0.020s (50.000 fps)

Interval: Discrete 0.033s (30.000 fps)

Interval: Discrete 0.050s (20.000 fps)

Interval: Discrete 0.100s (10.000 fps)

Size: Discrete 720x576

Interval: Discrete 0.017s (60.000 fps)

Interval: Discrete 0.020s (50.000 fps)

Interval: Discrete 0.033s (30.000 fps)

Interval: Discrete 0.050s (20.000 fps)

Interval: Discrete 0.100s (10.000 fps)

Size: Discrete 720x480

Interval: Discrete 0.017s (60.000 fps)

Interval: Discrete 0.020s (50.000 fps)

Interval: Discrete 0.033s (30.000 fps)

Interval: Discrete 0.050s (20.000 fps)

Interval: Discrete 0.100s (10.000 fps)

Size: Discrete 640x480

Interval: Discrete 0.017s (60.000 fps)

Interval: Discrete 0.020s (50.000 fps)

Interval: Discrete 0.033s (30.000 fps)

Interval: Discrete 0.050s (20.000 fps)

Interval: Discrete 0.100s (10.000 fps)

[1]: 'YUYV' (YUYV 4:2:2)

Size: Discrete 1920x1080

Interval: Discrete 0.200s (5.000 fps)

Size: Discrete 1600x1200

Interval: Discrete 0.200s (5.000 fps)

Size: Discrete 1360x768

Interval: Discrete 0.125s (8.000 fps)

Size: Discrete 1280x1024

Interval: Discrete 0.125s (8.000 fps)

Size: Discrete 1280x960

Interval: Discrete 0.125s (8.000 fps)

Size: Discrete 1280x720

Interval: Discrete 0.100s (10.000 fps)

Size: Discrete 1024x768

Interval: Discrete 0.100s (10.000 fps)

Size: Discrete 800x600

Interval: Discrete 0.050s (20.000 fps)

Interval: Discrete 0.100s (10.000 fps)

Interval: Discrete 0.200s (5.000 fps)

Size: Discrete 720x576

Interval: Discrete 0.040s (25.000 fps)

Interval: Discrete 0.050s (20.000 fps)

Interval: Discrete 0.100s (10.000 fps)

Interval: Discrete 0.200s (5.000 fps)

Size: Discrete 720x480

Interval: Discrete 0.033s (30.000 fps)

Interval: Discrete 0.050s (20.000 fps)

Interval: Discrete 0.100s (10.000 fps)

Interval: Discrete 0.200s (5.000 fps)

Size: Discrete 640x480

Interval: Discrete 0.033s (30.000 fps)

Interval: Discrete 0.050s (20.000 fps)

Interval: Discrete 0.100s (10.000 fps)

Interval: Discrete 0.200s (5.000 fps)
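One way to read the YUYV part of the listing is to estimate the raw data rate of each mode. Uncompressed YUYV 4:2:2 takes 2 bytes per pixel, so the rate is simply width x height x 2 x fps. The sketch below does that arithmetic for the fastest listed frame rate of a few sizes; it is only arithmetic on the numbers above, not a measurement, and the function name is made up.

```python
BYTES_PER_PIXEL_YUYV = 2  # YUYV 4:2:2 packs 2 pixels into 4 bytes


def yuyv_rate_mb_per_s(width, height, fps):
    """Raw (uncompressed) YUYV data rate in MB/s (1 MB = 1,000,000 bytes)."""
    return width * height * BYTES_PER_PIXEL_YUYV * fps / 1_000_000


# (width, height, highest fps listed above for YUYV)
modes = [(1920, 1080, 5), (1280, 720, 10), (800, 600, 20), (640, 480, 30)]

for width, height, fps in modes:
    rate = yuyv_rate_mb_per_s(width, height, fps)
    print(f"{width}x{height} @ {fps} fps: {rate:.1f} MB/s")
```

For these four modes the result stays within roughly 18 to 21 MB/s, which may be why the YUYV frame rates drop as the resolution goes up, while MJPG (compressed) can reach 60 fps.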

v4l2-ctl -d /dev/video0 --set-ctrl=contrast=148

v4l2-ctl -d /dev/video0 --set-ctrl=saturation=127

Posted by Teck at 1/07/2021 02:03:00 PM, 0 comments

Labels: Open source

Tuesday, January 05, 2021

Nice quotes from Violet Evergarden the Movie (11th viewing)

Anyway, for this post, I will be focusing on quotes from the movie that I like. Plus some miscellaneous findings. As usual, I was 😭 throughout the movie.

「強く願ってもかなわない思いはどうすればよいのでしょうか」

"Wishes come true if one hopes for them strongly enough."

"What should one do if one's wish will not come true no matter how strongly one hopes?"

"Forgetting is difficult... As long as I am alive... Forgetting is... impossible."

Posted by Teck at 1/05/2021 11:30:00 PM, 0 comments

Labels: Movies, Violet Evergarden

Monday, January 04, 2021

Mori no Gakkou (2002 film)

Posted by Teck at 1/04/2021 11:38:00 PM, 0 comments

Labels: Movies

Updated "Nunkish" translator script

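import requests

# Assumption: google_translator comes from the google_trans_new package
from google_trans_new import google_translator

translator = google_translator()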

alphabet = {

'a': 'u',

'b': '',

'c': 'y',

'd': '',

'e': 'o',

'f': '',

'g': 'v',

'h': 't',

'i': 'i',

'j': '',

'k': 'r',

'l': 'i',

'm': 'p',

'n': 'n',

'o': 'e',

'p': 'm',

'q': 'l',

'r': 'k',

's': 'y',

't': 'h',

'u': 'a',

'v': 'g',

'w': '',

'x': '',

'y': 'c',

'z': '',

'A': 'U',

'B': '',

'C': 'Y',

'D': '',

'E': 'O',

'F': '',

'G': 'V',

'H': 'T',

'I': 'I',

'J': '',

'K': 'R',

'L': 'I',

'M': 'P',

'N': 'N',

'O': 'E',

'P': 'M',

'Q': 'L',

'R': 'K',

'S': 'Y',

'T': 'H',

'U': 'A',

'V': 'G',

'W': '',

'X': '',

'Y': 'C',

'Z': '',

' ': ' ',

'0': '0',

'1': '1',

'2': '2',

'3': '3',

'4': '4',

'5': '5',

'6': '6',

'7': '7',

'8': '8',

'9': '9',

}

tamil_script_url = 'https://inputtools.google.com/request?text={text}&itc=ta-t-i0-und'

source_language = 'ta'

target_language = 'en'

def trans(nunkish):
    # Swap each Nunkish letter for its counterpart in romanized Tamil
    tamil = ""
    for char in nunkish:
        if char not in alphabet:
            tamil += char
        else:
            tchar = alphabet[char]
            if tchar:
                tamil += tchar
            else:
                tamil += "?"
    print(f"Converted to tamil: {tamil}")
    # Ask Google Input Tools to convert the romanized text to Tamil script
    tamil_res = requests.get(tamil_script_url.format(text=tamil), headers={
        'Content-Type': 'application/json'
    }).json()
    if tamil_res[0] != 'SUCCESS':
        # Guard: without this, tamil_script would be undefined below
        return None
    tamil_script = tamil_res[1][0][1][0]
    print(f"In Tamil script: {tamil_script}")
    return translator.translate(f'{tamil_script}', lang_src=source_language, lang_tgt=target_language)

while True:
    nunkish = input("Input nunkish: ")
    print(f"You entered: {nunkish}")
    print(trans(nunkish.replace('\n', ' ').replace('\r', '')))
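The script picks the Tamil text out of the Input Tools response with `tamil_res[1][0][1][0]`. To make that indexing easier to follow, here is an offline sketch that walks a hand-written sample response. The sample is an assumption shaped to match the indexing in the script (it is not a captured response), and `first_candidate` is a hypothetical helper.

```python
# Hypothetical sample, shaped as [status, [[input_text, [candidates...]]]]
sample_response = ["SUCCESS", [["Nanri mejar", ["நன்றி மேஜர்"]]]]


def first_candidate(response):
    """Return the first candidate string, or None if the lookup failed."""
    if not response or response[0] != "SUCCESS":
        return None
    try:
        # [1] -> list of results, [0] -> first result,
        # [1] -> its candidate list, [0] -> top candidate
        return response[1][0][1][0]
    except (IndexError, TypeError):
        return None


print(first_candidate(sample_response))
print(first_candidate(["FAILED"]))
```

Returning None on failure lets the caller decide what to print, instead of hitting an undefined variable further down.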

Posted by Teck at 1/04/2021 11:12:00 AM, 0 comments

Labels: Open source, Programming, Violet Evergarden